Hacking numbers

In this essay we'll explore the correspondence between statistical mechanics, type theory and number systems, introducing ideas from a wide variety of fields. We'll get a sense for some different ways we can represent the different states of a system, along the way trying to motivate why this even matters. Once we establish this correspondence, we'll introduce a novel geometrical interpretation, and explore some of the implications of looking at things from this perverse, asking a lot more questions than we answer; all the while, reveling in the absurdity of it all.

We're covering a lot of ground here – and just scratching the surface – all while trying to assume very little prior knowledge. The more tangential material is collapsed behind toggles, but it's still a lot to take in. Take your time; kick the tires on the geometry. This should be a leisurely tour over some otherwise well-trodden ground, but from a particularly perverse perspective.

Wandering through configuration space

First thing's first, what in the world is a configuration space? Simply put, you can think of it as a space where every point represents a possible state of an entire system. That is, every possible way the given system could be configured. The word space here is used loosely – it may have nothing at all to do with our everyday notions of physical space.

A few examples should make this more clear.

Imagine you were adding some smart switches to your home, and you wanted to build a user interface to keep track of it all. What might this look like? That depends on what switches you want to add, right? These define the different possible states this system can be in.

So let's add some specifics to our contrived example. Say you have 3 switches, each of which can only be in the ON state or the OFF state. Now how might you imagine it?

There are an endless combination of stylistic choices, sure. But let's ignore aesthetic considerations. This really narrows down the design space.

You're probably imagining 3 switches, side by side, right?

As systems get more complex, more options open up. But whatever the system looks like, it's the underlying configuration space which completely constrains the design possibilities.

For simple systems we can put every possible state out in a table. In this case:

| Switch A | Switch B | Switch C |

|---|---|---|

OFF |

OFF |

OFF |

OFF |

OFF |

ON |

OFF |

ON |

OFF |

OFF |

ON |

ON |

ON |

OFF |

OFF |

ON |

OFF |

ON |

ON |

ON |

OFF |

ON |

ON |

ON |

Tabulating every state gets exponentially harder for larger and larger systems. Even still, we can isolate parts of a larger system, and apply the same approaches to these subsystems; these slices of our configuration space.

Learning how to count

There are whole areas of study devoted to answering these same types of counting questions. Eumerative combinatorics, statistical mechanics, and so on. In computer science we have type theory.

Back when I was first learning to code, I remember being absolutely mystified by type systems. The extra syntax was confusing and cryptic. The kinds of errors they were preventing didn't seem all that important, and often seemed to slow down development.

It was this kind of state accounting which I really wanted to wring from my type system. Even before I really understood how build a system, I knew it was this picture of possible states that really mattered. What I like to call the state space picture. This tells us what truly necessary – and what's even possible – within any given system. This picture tells us really necessary – what's even possible – for any system you want to even reason about.

Eventually I came to realize that in a real world system, the database is where it's at. But I'd still wonder why my type system could tell me so little about the possible states of the system I'm hacking on.

An algebra of types?

When I first learned about algebraic data types, also called ADTs for short, I finally found what I was looking for. The types all fit together in a nice little package, and the ideas are generally applicable, even when you're not writing code in a language directly supporting them.

By this point I'd already learned enough about slinging code to know my way around a few different programming languages. Knowing a programming language really means knowing its idiosyncrasies; the idioms; the footguns. That is, all those places where the language has a gun pointed square at the foot of us developers. In my experience, so many of these are weird inconsistencies in the type system (e.g. see here, here, or here).

And yet, here was this system of types, this concept defined independent of any one programming language, which allowed you to reason about and manipulate types as you might manipulate algebraic equations.

A calculus of types?

What's more, you can even do calculus? What sorcery is this?

There's so much more here to explore – you can go much deeper. Believe it or not, you can also take the derivative of an ADT, and get something you can actually make sense of. The derivative of a list is called a "zipper" – a one-hole context. It's just two lists (one of which is in reverse – which might be relevant later...).

What is the derivative of a binary tree? Why do the coefficients have to do with the Catalan numbers?

This is an incredibly rich topic, with some accessible yet still mind-melting details to explore.

But this isn't an essay about type theory. We're just trying to understand the various possible states that simple systems can be in.

Equivalence of types

Let's tweak the prior example a bit to draw out an analogy useful for understanding how to think about these configuration spaces are related.

For this new system, let's add a different kind of switch – a dimmer with 4 state: OFF, LOW, MED, and HI. Perhaps it represents a fan? It doesn't really matter...

In fact, keeping track of these different kinds of switches is already getting tedious. Let's just index their possible states with numbers, so we can write { 0, 1, 2, 3 } for { OFF, LOW, MED, HI }.

Now, back to this new system. Let's say it consists of just one ON/OFF switch and one of these 4-state dimmers. What are all the states?

| On/Off Switch | Fan Switch | 2×4 States |

|---|---|---|

OFF |

OFF |

(0,0) |

OFF |

LOW |

(0,1) |

OFF |

MED |

(0,2) |

OFF |

HI |

(0,3) |

ON |

OFF |

(1,0) |

ON |

LOW |

(1,1) |

ON |

MED |

(1,2) |

ON |

HI |

(1,3) |

This system is clearly different than before. Or is it? This depends on what you consider different.

The difference in the two configuration spaces is just like the difference between the two expressions 2×2×2 and 2×4, and for the same reasons. They both have 8 states in total, so in terms of the kind of accounting done in type theory, they're both equivalent to any system that can sum to 8.

Equivalence of macrostates

Statistical mechanics draws a distinction between microstates and macrostates. The 23 in our initial 2×2×2 example would be microstates, as the state of each switch is preserved, along with the overall order.

Macrostates, on the other hand, are those where order is ignored. Thus, the microstates (0,0,1), (0,1,0), and (1,0,0) would all be considered the same macrostate. As would (0,1,1), (1,0,1) and (1,1,0). The remaining macrostates would be (0,0,0) and (1,1,1).

What about when you flip the order of the two switches? What would you expect for the expression 4×2? Is this substantively different than 2×4? How do you think this might affect the table?

| On/Off Switch | Fan Switch | 4×2 States |

|---|---|---|

OFF |

OFF |

(0,0) |

OFF |

ON |

(1,1) |

LOW |

OFF |

(2,0) |

LOW |

ON |

(3,1) |

MED |

OFF |

(0,0) |

MED |

ON |

(1,1) |

HI |

OFF |

(2,0) |

HI |

ON |

(3,1) |

So what do you think now? Is the 2×4 system and the 4×2 system the same? It would seem that it depends on how you choose list out the states. But what if we use a consistent rule to enumerate them, as we're doing here?

As you may have noticed in the above tables, we're stepping through the states of each component, one by one, from right to left – just as we would a numeral. We are essentially indexing each state with the digits of a mixed radix number system. Each possible mixed radix system will have its own ordering of elements, which dictates the positions of the points in our configuration space.

Alternative enumerations?

Can you think of any way alternative approaches to consistently enumerating all the states?

One in particular could useful – the Residue Number Systems. This may only make sense when the sizes of all components are coprime, but it does open up a totally different strategy for enumerating each item – adding 1 to every component all at once.

This has some surprising and incredibly powerful implications. There's a lot to say about this altogether different approach, but that'll have to wait for another day...

Functions as exponentiation

There's a yet another way to make 8, though – through the more exotic operation of exponentiation: 23. In our type algebra we can give a distinct interpretation to exponentiation. This represents all the different ways we could build a function, or map, from a 3 state system (a type with 3 inhabitants) to a 2-state system (a type with 2 inhabitants). As you might guess from the expression, there are exactly 23 = 8 of these possible maps.

For a 3-state system, we can index each state with this set: { 0, 1, 2 }. For a 2-state system, we just need the set { 0, 1 }.

Now we can write out the first map of all possible values of our 3-states system: { 0, 1, 2 }. We'll map all 3 inputs to the 0 state of our output (OFF):

{ 2 → 0, 1 → 0, 0 → 0 }

Next up, the map which takes the last input, 0, to the 1 state of our output (ON), with everything else going to 0:

{ 2 → 0, 1 → 0, 0 → 1 }

We can get the remaining possible maps by simply continuing to loop through the binary numbers, setting our output states appropriately. Let's look at the complete set of 23 maps:

{ 2 → 0, 1 → 0, 0 → 0 }

{ 2 → 0, 1 → 0, 0 → 1 }

{ 2 → 0, 1 → 1, 0 → 0 }

{ 2 → 0, 1 → 1, 0 → 1 }

{ 2 → 1, 1 → 0, 0 → 0 }

{ 2 → 1, 1 → 0, 0 → 1 }

{ 2 → 1, 1 → 1, 0 → 0 }

{ 2 → 1, 1 → 1, 0 → 1 }

Again, this is every possible map from 3 states to 2. Each line runs through all three input states in the input, mapping each to one of the 2 output states.

You may also notice, if you focus just on the outputs, and read them from top to bottom, that this just runs through the numbers in binary: 0002, 0012, 0102, 0112, 1002, 1012, 1102, 1112.

If we think of each row of output as a 3 digit binary number, the place index of each of these digits can be seen as the input (key) of each map entry.

This is the reason for listing the order of input states across the entries of each map row in descending order – because we tend to write our numerals backward, with the most significant bit first.

Turning these maps inside-out

In case you were wondering what happens when you flip this around, here's the complete set of 32 maps:

{ 1 → 0, 0 → 0 }

{ 1 → 0, 0 → 1 }

{ 1 → 0, 0 → 2 }

{ 1 → 1, 0 → 0 }

{ 1 → 1, 0 → 1 }

{ 1 → 1, 0 → 2 }

{ 1 → 2, 0 → 0 }

{ 1 → 2, 0 → 1 }

{ 1 → 2, 0 → 2 }

As you can see, the outputs are just the base 3 numerals all the way up to 32.

This is how to think about functions in type theory. As you might guess, you can do the same thing for types with an infinite number of inhabitants, like lists.

But this is not an essay about type theory. We're just trying to understand the possible states available for these simple systems.

System composition

What if we wanted to build a system that consisted of two copies of the exact same system? How many states are in this pair of systems? If we use any of our examples from before, you'd have 8×8 = 82 states. The combined states of 3 copies? 8×8×8 = 83 states. Another exponential.

We could be considering any of the systems we've explored with 8 states: 2×2×2, 2×4, 4×2, 23. When it comes to taking powers, building up lists, they should all behave the same; the subtle distinctions in structure and order won't matter. So we'll just write 8 from here.

Now, what if we want to model a list which could be any size? We can just add up all the possible sizes: 80 + 81 + 82 + 83 + 84 + ...

We haven't yet covered the addition operation, we've only discussed various forms of multiplication. But what algebra would be complete without it? Addition in our type algebra, as a disjoint union, can actually be surprisingly subtle at times. But it does exactly what you think it should in this case. This is because every list of a given length can be distinguished from the lists of any other length.

You can read this from left to right as a list containing no copies of our 8-state system (80) or a list containing one copy of our 8-state system (81) plus a list containing two copies of our 8-state system (82), and so on...

Series collapse

For those familiar with power series, you might recognize this as a Taylor series expansion. With some care, you can even treat it as such, and collapse it down: (1-8)-1

What?! Is that a negative... reciprocal type? What could it possibly mean to have a negative number of states? And fractional states? Are there any satisfying interpretations of such things?

These are absolutely delightful questions to explore.

We had already identified the various inhabitants of our finite systems as analogous digits. Now, as we consider all the possible inhabitants of these homogenous lists of any size, the correspondence with the natural numbers in standard positional notation should become apparent.

Byte strings as base 256

A computer program, like the contents of any other file on a computer, can be represented as a list of bytes. A byte is a sequence of 8 bits. So in terms of algebraic data types, this gives us 2×2×2×2×2×2×2×2 = 256 states.

An arbitrarily long list of bytes can be thought of as a numeral written in base 256.

Of course there are many other ways to interpret these 256 options, but we can disregard these details, for now. From the perspective of our type algebra, we just care about the total states.

Our 80 + 81 + 82 + 83 + 84 + ... system will a configuration space that is indistinguishable from the natural numbers written in base 8. This is a type definition for the octal numbers.

Or perhaps more accurately, strings of octal digits. All of this applies just as well to strings, which are nothing more than lists characters. And what's a character? Err...

Okay, at this point we could keep torturing this analogy to explore a whole host of characters and concepts from the land of software, familiar to any grizzled developer, regardless of the the languages they speak.

But this isn't an essay about programming languages. We're just thinking in terms of systems and states, trying to understand the information content of simple systems, and simple combinations of them.

Lists as positional numerals

Over the years, one thing I've found that can help me gain a better understanding of a given idea or concept is when I can map it over to something altogether different. A good analogy can serve as a bridge between two seemingly different worlds. When an analogy is tight enough we can lift anything known to be true from one side of the bridge to the other.

This can be especially enlightening when you know the result, no matter how absurd, must be true. I like to call this game go home universe, you're drunk! And the more I explored the correspondences between states and digits, the more the absurdities piled up.

To make any real progress understanding this amusing mess I figured I needed a better understanding of digits themselves, and the mechanics of number systems.

What even is a digit?

The key to understanding digits lies in modular arithmetic; the modulo function in particular. The output of this function could reasonably be called a digit. The first digit of some numeral written in base $m$, where $m$ is your modulus.

What better way to get a sense of a function that to graph it.

Let's see: $\mod(x, m)$

Triangles. A sawtooth wave. As we vary $m$, the modulus, we grow our shrink the amplitude of this sawtooth wave. Boring but predictable.

You could think of each point on this line as if it's on a circle. You might imagine rolling this up into a cylinder, with the dot just circling, round and round.

That's the effect of mod – it just rolls us back to the beginning. Back to 0 at every factor of $m$, every time division is left with remainder 0.

mod vs. rem

This highlights a subtle difference between the modulo function and the very similar remainder function. The "remainder" is defined to use the sign from the dividend (first argument), while the "modulus" uses the sign from the divisor (second argument).

This distinction only shows up on the negative end of the domain, when $x$ is negative. But this can be frustratingly ambiguous, like a lot of math syntax, and is very likely to trip you up at some point.

We'll use the mod definition, as it's more useful for our purposes:

$$\mod(x,m) := x - m \left \lfloor \frac{x}{m} \right \rfloor$$

But what happens if you flip things around – hold the first argument, the dividend, constant, and look at every modulus, side by side, along the $x$ axis?

Let's use $n$ for the argument, our numerator: $mod(n, x)$

Well that's... different. What even is this thing? Does it have a name?

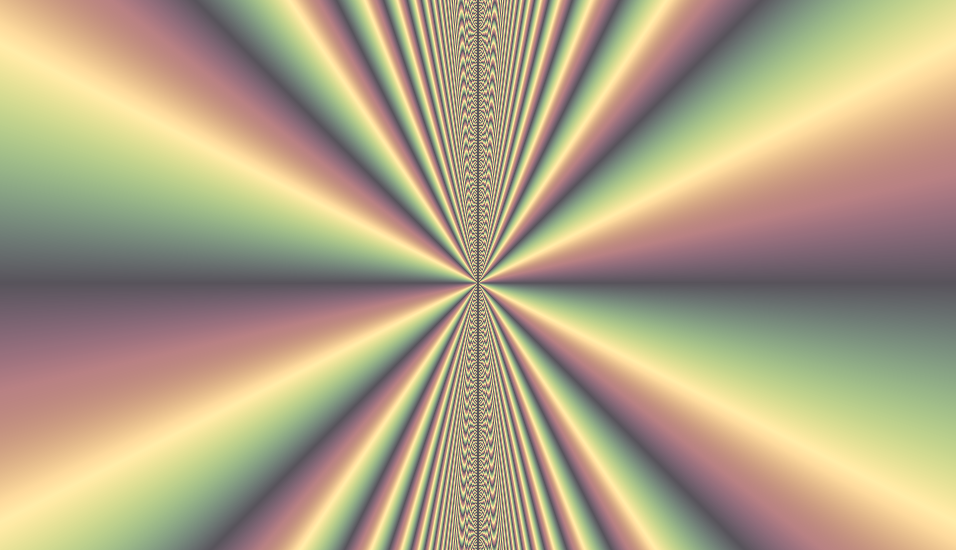

A curious space

How does it behave when you vary the numerator $n$ of our modular division?

What's up with those lines? Do you notice anything about their slopes?

One advantage of looking at mod as a continuous function is we can take a look at the derivative: $\frac{d}{dx} mod(n,x)$

The slopes of these lines are integers, and they break at exactly the right points to trace out a rectangular hyperbola... almost.

Specifically, the lower and upper bonds are the hyperbolas $1-\frac{n}{x}$ and $-\frac{n}{x}$:

What happens when we look at some of the other representatives?

$$mod(n,x) + [-5...4]x$$

Each color represents a distinct set of representatives equivalent to $10 \pmod{x}$. To consider all the equivalent representatives, you can imagine repeating this all the way out to $∞$, in both directions!

That's the funny thing about modular arithmetic: it just keeps cycling. The same point will repeat, vertically, every $x$ distance. This periodicity is the very essence of modular arithmetic. This is true of any $x$ in our plot, as here $x$ represents our divisor – the modulus. This doesn't just apply to integers; it's true for any real $x$!

Each point represents a residue mod x. Specifically, the value in the range from $0$ to $x$, excluding $x$ (which we'll write as $[0,x)$) is called the fundamental residue. But you can think of all the points up and down any given vertical line $x$, separated by a distance $x$, as equivalent. We say they're all members of the same equivalence class mod x.

All these points are equivalent for a given $x$. That is, they are in the same equivalence class mod $x$.

That is, you can treat them all as if they're the same. This is very much like how we think about angles. When considering a rotation, we can split it into two parts: the number of complete turns, and the remaining fraction of a turn left over. The whole number of turns we might call a turning number. The leftover bit is what we think of as an angle.

Mixed fraction analogy

You can split any real number into an integer part and a fractional part.

You have the freedom to choose a denominator for your fractional part, creating a mixed fraction.

You can choose not to reduce your fractions, creating what we call an improper mixed fraction.

If you choose to fix your denominator to some value – say, some integer $n$. The fractional part, if constrained to certain rational numbers, could be thought of as an analog to the integers modulo $n$. The numerator of this fraction could be thought of as representing a residue in $\Z/n\Z$.

Your integer part... remember your integer part? We started by splitting a number into its integer and fractional parts. You can opt to forget the integer part – as we generally do in modular arithmetic – and you'd have a proper mixed fraction., analogous to an angle. But if you keep it around, this reflects the quotient of our modular division, analogous to a turning number.

Fun fact: even when you try to forget it, this ends up encoded in the slope of the line cutting through $x$ in our mod cone. Thus, you can recover this integer part by taking a derivative; a derivative which can only really exist within a continuous treatment of modular division, as we're exploring here.

So we can think of each line $x$ as a circle of circumference $x$ that just wraps back on itself. All these circles, taken together, would then resemble a cone.

What to even call this thing?

This interpretation as a cone is why I tend to call this the mod cone, but only for lack of a better name. If this thing (shape? topological space?) already has a name, I would love to hear it! If not, I'd love suggestions for what to call it!

The geometry of numerals

Start with our mod function, taking each value of $x$ as modulus. Probe its value at each "place", for a chosen "base". Connect up these points.

Each line segment is in perfect analogy with the digit of a numeral written in our chosen base. And we can literally read off the value of each digit from the slope of the line segment.

Let's start with an example in binary; base 2. We sample the value at every power of 2, while we allow the value $n$ to vary, cycling through a range of integers.

Enumeration of base 2 integers, as constructed on the mod cone.

You can read the digits from right to left, with the rightmost nonzero digit being the most significant bit, or MSB – at least, when positive. When negative, note the infinite string of 1s in ever increasing powers. This is called the two's complement representation.

Focus in on a given power of two. At every change, you can see it slip upward one unit. Eventually, as it approaches the line $y=x$ and it runs out of room, it drops back to 0 to start all over again. Explore it yourself.

Reading digits off the mod cone

Here's an interactive version. Feel free to futz around and see how this works. Change the base, $b$. This uniquely represents any number $n$ in any base $b$, as line segments. Each line segment maps precisely to a digit, and the whole number can be read off, place by place, digit by digit.

Interactive mod cone representation of any numeral in any base, defaulting to $37_{10}$.

The details of a particular digit are highlighted in orange. You can sweep through the different places with the orange $p$ slider on the left. You can change the base with the green $b$ slider. The red slider controls $n$.

Or edit it directly for more control. You can tweak the values by hand, rig things up for better viewing, etc.

What do you think happens for bases less than 1? Take a look at $b=\frac{1}{2}$ vs. $b=2$. What do you notice? Try it $b=\frac{1}{b}$ for any $b$. The list digits should appear to reverse. Why do you think this might be?

Stupid digit tricks

There's no shortage of stupid digit tricks like this; little hacks and oddities, mathematical curiosities; the stuff of recreational mathematicians. The word recreational is the shade thrown by professional mathematicians at this of math; at anything remotely related to number systems; anything with even a whiff of numerology.

But there are some very good reasons to explore and catalog all the many digital absurdities. There are so many promising connections to explore between seemingly disparate fields; bridges spanning somewhat distant intellectual archipelagos. This one analogy spelled out here is just a jumping off point.

From here, we can chart a course over some other well-trodden bridges, long mapped but with new perspective, and new tools. For example, we can walk Curry-Howard bridge from type theory to mathematical logic. This is but one of many bridges.

The ability to better model and reason about state spaces – possible worlds – has broad applications. But perhaps more interesting, it has subtle, under-explored implications. The basic idea that any fact true of digits can be carried over to algebraic types.

As you play around in this sandbox, you start to get a visceral feel for these properties. You can see with your own eyes how reciprocating the base reverses our list of digits. You can get some spacial intuition for how the method of complements works, and how this is related to p-adic numbers, with their infinity of nonzero digits with larger and larger exponents.

Could this help us find reasonable interpretations of the more exotic forms we met earlier, e.g. negative and reciprocal types? What else can we learn about counting states?

A universal numeral?

What seems to happen at $b=1$? It all goes to hell, thanks to a division by $0$. Okay, but what happens as you get closer and closer to $b=1$? (You may want to tweak things to add more places, and to suppress the labels for each place.) What do you see when we come at it from above? From below?

Is this mod cone the true unary number system? It's usually claimed that the unary numbers, base 1, is given by the tally system. But wouldn't this more accurately be called Bijective base 1? But digits written on the mod cone approach the mod cone itself as the base approaches 1. Is it the mod cone itself that should be considered the true unary? (Or at least a q-analog?)

This is the metaphysical substrate upon which numerals are etched. And this extends far past the standard positional notation.

There's a whole menagerie of nonstandard positional number systems. It's a fun exercise finding geometrical interpretations in terms of the mod cone.

I'll spare you the comprehensive speed run through this freak show of degeneracy. But just to give a sense, let's look at a very simple example, bijective numeration. This freak comes from a slight tweak in the alphabet of digits we use. As an example, in decimal the set of digits we have available is { 0, 1, 2, 3, 4, 5, 6, 7, 8, 9 }. In bijective base 10, the available digits are { 1, 2, 3, 4, 5, 6, 7, 8, 9, a }, where a has the value 10 (this is typically how we write the digit with the value of 10). Note how the set of digits excludes 0 but includes a value equal to the base – this is the characteristic feature of a bijective number system.

In full generality

Of all the ways you could extend positional notation to generalize it, one of the most flexible is to just allow the base to vary by place for numbers written in your system. These are the mixed radix number systems.

Enumerating integers on the mod cone for the mixed radix system (3,5,7,8).

Here, the integers of the mixed radix system (3,5,7,8) are stepped through, with each digit, associated with its own radix, labeled. The rules of the game are still teh same, but feel free to play around with it. Change up the radix values (the $b_V$ parameter).

Can you think of anything else we might model with a mixed radix system?

One classic example is time. 60 seconds in a minute, 60 minutes in an hour, 12 hours in... a half-day (one turn of the hour hand around the clock face), and two of those in a day.

0.999... problems...

Even just the ever so subtle detail about what you do with points which would land exactly on this line $y=x$, whether they should get sent to the line $y=0$ or stay on the line $y=x$, could be thought of as two distinct systems; each of which can uniquely represent any real number in your chosen base.

As an example, let's look at 0.999... in decimal. Let's start from 0.9, then keep adding 9s:

Clearly this just keeps getting closer and closer to the diagonal line $y=x$, filling in a triangle. But what happens when you use an infinite number of 9s, finally completing the triangle? As is well known, this infinite series sums to exactly 1 – you could probably even prove it to yourself just by reasoning about the geometry here. But what happens when we get to 1? We roll back down to 1.000..., as is the nature of the mod function.

It seems we can't quite represent the triangle 0.999.... Or can we?

We could change up the rules to prefer the infinite series. This way, we preserve the 0.999... triangle, and could represent any infinite string of 9s, but at the cost of being able to represent 1.000..., or any infinite string of 0s.

But we can't have it both ways. At least, not if we want to represent numerals with a single consistent set of rules, as a function, as we're doing here.

And yet, for some reason, when we talk about decimal numbers we smash these two subtly different systems together and call it decimal? Why?! This is completely incoherent. But I digress...

I know its controversial, sure, but it has to be said. To write 0.999... = 1, while technically correct, is no different than to write 9.999... = a. They both look like type errors to my pedantic eyes. In both cases, we have two numerals in two compatible but demonstrably distinct systems!

Hyperextended digits

But okay. If we're going to be so blasé with our digits, why not go all in and allow even more digits?

That's already a thing. Allow me to introduce the hyperbinary numbers. These are just the same as regular binary numbers, with digits 0 and 1, but we toss in an extra digit, 2, for free. And this gives us some extra freedom, with extra paths opening up in how we may construct a given number.

For example, let's look at the number 27. In binary there is exactly one way to write 27:

- 110112 = 1×24 + 1×23 + 1×21 + 1×20 = 16 + 8 + 2 + 1 = 27

In hyperbinary, we still get the one representation available for binary, but two additional paths become available:

- 102112 = 1×24 + 2×22 + 1×21 + 1×20 = 16 + 8 + 2 + 1 = 27

- 22112 = 2×23 + 2×22 + 1×21 + 1×20 = 16 + 8 + 2 + 1 = 27

And we can represent this on the mod cone using exactly the same rules as before. But now, our digit 22 has a slope of 2, allowing our paths to climb up above the line $y=x$ and into the next higher harmonic.

W. T. Fusc?

So how many ways are there, exactly, to represent a given hyperbinary number? Let's list out all the hyperbinary representations for the first dozen integers:

| n | b(n) | hyperbinary representations |

| 0 | 1 | 0 |

| 1 | 1 | 1 |

| 2 | 2 | 10, 2 |

| 3 | 1 | 11 |

| 4 | 3 | 100, 20, 12 |

| 5 | 2 | 101, 21 |

| 6 | 3 | 110, 102, 22 |

| 7 | 1 | 111 |

| 8 | 4 | 1000, 200, 120, 112 |

| 9 | 3 | 1001, 201, 121 |

| 10 | 5 | 1010, 210, 1002, 202, 122 |

| 11 | 2 | 1011, 211 |

The upshot (from this slidedeck):

If one writes out the sequence $b(n)$, which counts the number of hyperbinary representations of $n$, and then makes fractions out of the successive terms in the sequence, then one obtains an enumeration of all positive rational numbers, each in lowest terms, with no repeats!

The sequence $b(n)$ has a name: the $\operatorname{fusc}$ function. And it too has all kinds of interesting properties, which have been studied at length.

Generalizing hyperbinary

As you may have already wondered, why limit ourselves to just one extra digit? What happens when you add more and more extra digits – opening up paths within higher and higher harmonics?

For example, here are the various available paths representing 10 when we allow for an even larger overflow of available digits:

You can read off the digits of line segment using the same rules outlined above.

Is there anything particularly special about binary? Can we play this same game of expanding our alphabet of digits, overflowing the base, for "bases" other than 2? Could this be made to work for non-integer bases? Sure! Try it out it out and see what happens.

You can even play out this game, with all the same geometry, in the fully general case of any arbitrary mixed radix systems.

But are there any other $\operatorname{fusc}$ing functions out there? That is, closed-form expressions capable of counting up the total number of possible representation paths, for any of these other hyperextended systems? Could there be a way to do this in general? I could certainly think of a few things this could be be useful for...

Here we have a laboratory to study a general class of systems we can rig to lack a unique trajectory. If you're into the quantum stuff, these spaces are an untapped abundance of toy models and metaphors to explore.

So many forking paths

There's so much more to say – about this space and many like it, conjured purely from no more than numbers themselves, brimming with realizations of concepts of pure number theory, yet accessible in a way that allows you to see them in a way algebra alone never could. To touch them, to manipulate them.

I’ve heard it said that a good writer is a good direction giver. They know what it takes to tell folks how to get to where they want them to go, without getting them lost anywhere along the way. Every sentence leads into the next, with no u-turns, dead-ends, detours through the culdesacs of the author’s mind.

By this measure, I've clearly failed miserably.

There are so many forking paths here, each with its own character and quirks. Each hiding representations of a whole menagerie of mathematical curiosities, some well-known, others somewhat mysterious, at least to hacker such as myself. It's impossible to chart a straight line course through.

I just hope this essay, sprawling and scattered and unpolished as it may be, helps to highlight some of the richness, the multitudinousness, of this mod cone space, and serves as some justification for a deeper exploration of digits and numerals for their own sake; and perhaps, to sow some seeds for future exploration.